Introduction: A Completely 3D Printable Mixed Reality Video Game (Pseussudio)

Given the imminent release of the Project Tango 3D scanning smartphone from Google and Lenovo, as well as the likely 3D scanning-capable iPhone 7 Plus from Apple, I decided to create a rough proof-of-concept video game to demonstrate a complete mixed reality ecosystem in which physical content is brought into the digital world with 3D scanning and pushed back out into the physical world with 3D printing.

This Instructable will walk you through using a consumer 3D scanner (the Structure Sensor from Occipital) to capture reality data (my entire apartment, myself, and people from my life), importing that data into Unity, and coding a simple video game. Pretty much all of the assets in this game are 3D printable!

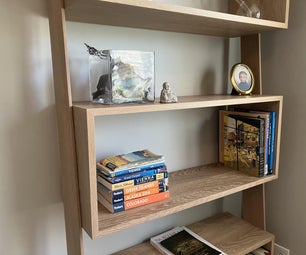

Step 1: Read a Few Books for Inspiration

If you want to build your own mind-bending reality simulator, you'll need to get familiar with a few great books: VALIS by Philip K. Dick, The Doors of Perception by Aldous Huxley, and, if you've got time, Gravity's Rainbow by Thomas Pynchon. If you don't have time, head to Peru for some ayahuasca.

Step 2: Capturing Reality Data

I fell in love with the Structure Sensor from Occipital after reviewing it for 3D Printing Industry in 2014. This depth sensor, based partly on technology from Kinect inventors PrimeSense, works with most newer Apple smartphones and tablets, though color 3D scanning is only available for iPads at the moment. Occipital has since developed a new platform-independent 3D sensor called the Structure Core, which can be embedded into various devices to run their Bridge Engine.

I finally got my own Structure for Christmas in 2015 and used it to scan my wife, Danielle, and two friends, Tony and Melena. The ItSeez3D app is awesome for 3D scanning people and some small objects, creating full-color, 3D printable scans with ease. By exporting all of the scans as .obj files, we were all made 3D printable quickly, with each scan taking about a minute to perform and 10 minutes to process. Not all of the details came out perfectly. Most of our hands look really funky.

Step 3: Capturing Reality Data Part 2

Capturing our apartment was not so easy. Though the Structure has its own room scanning app, it's not quite powerful enough to scan a complete room. Skanect, also from Occipital, works a bit better, but I still had trouble capturing every detail of a room in a single scan. Because this was a proof of concept, however, it wasn't entirely necessary for me to do so! So, I just captured most of the rooms in my apartment as best I could. A sloppy scan is easy enough and Skanect allows users to save their models as watertight, full-color models, making them more or less 3D printable. I say more or less because there is a lot of data one would need to clean up in order to 3D print these models. However, if I had the time and skills, I could have made our townhouse a lot better suited for 3D printing.
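If you want to check whether an exported scan really is watertight before sending it to a printer, the idea can be sketched in a few lines. This is a minimal sketch in plain Python, assuming the scan is saved as .obj text with positive vertex indices; the function names are made up for illustration and are not part of Skanect or any library. It only tests that every edge is shared by exactly two faces, which catches holes and stray fins but not the other cleanup a messy scan needs.

```python
from collections import Counter


def parse_obj_faces(obj_text):
    """Return faces as tuples of vertex indices, read from the 'f' lines of .obj text."""
    faces = []
    for line in obj_text.splitlines():
        parts = line.split()
        if parts and parts[0] == "f":
            # Entries look like "3", "3/1", or "3/1/2"; the first number is the vertex index.
            # Assumption: indices are positive (relative negative indices are not handled).
            faces.append(tuple(int(p.split("/")[0]) for p in parts[1:]))
    return faces


def is_watertight(faces):
    """True if every edge is shared by exactly two faces (no holes, no extra fins)."""
    edge_counts = Counter()
    for face in faces:
        for i in range(len(face)):
            a, b = face[i], face[(i + 1) % len(face)]
            # Store each edge with its endpoints sorted so direction does not matter.
            edge_counts[(min(a, b), max(a, b))] += 1
    return bool(edge_counts) and all(n == 2 for n in edge_counts.values())
```

A closed shape such as a four-faced pyramid passes this check; delete any one face and it fails, which is roughly what a hole in a room scan looks like to a slicer.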

Step 4: Building a Simple Unity Game

Dragging items into Unity is easy, but building the game is a little harder. I found a tutorial called Roll-A-Ball, which goes through the steps of making a simple game in which a player picks up various items to reach a certain score.

Then, I replaced each item one by one, until it wasn't about a ball rolling around and hitting rotating cubes, but about a human and his search for meaning in the world. This was when things got tough! I began with a copy of me that just rolled around like the ball around a blank world.

To get me to move like a person, I cheated a little bit. I downloaded and imported this pre-made Mecanim Demo from Mixamo. Then, I found the "DemoScript" from the Mecanim folder and dragged it, along with all of the associated animations, onto my character. Automagically, this worked! All of a sudden, Mike was a regular walking dude!

I then replaced all of the rotating cubes with a 3D scan of my wife, Danielle. Danielle's texture map was altered in Photoshop to make her look all funky. This is a super easy thing to do and results in some pretty cool models, if I do say so myself.

I've uploaded the scripts used in this game here. The DemoScript not only controls the character onto which it's attached, but also counts the number of "Pick Up" items that the user picks up until it gets to 3, at which point the game is over. It also includes coding for the sounds in the game, which you'll learn a tad more about in a future step. The Main Camera is attached to the player so that it follows him around. The Rotator Script just causes the heads to rotate and to disappear when the player walks into them.
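The pick-up counting and the spinning heads boil down to very little logic. The sketch below is plain Python, not the Unity C# DemoScript or Rotator Script themselves; the class, method, and parameter names are hypothetical and only mirror the behaviour described above: each head the player walks into disappears, the count goes up, and the game ends at 3.

```python
class PickupGame:
    """Tracks collected pick-ups and ends the game when the target is reached."""

    def __init__(self, pickup_names, target=3):
        self.remaining = set(pickup_names)  # heads still visible in the level
        self.target = target
        self.collected = 0
        self.game_over = False

    def collect(self, name):
        """Called when the player walks into a pick-up; returns True if it counted."""
        if self.game_over or name not in self.remaining:
            return False
        self.remaining.remove(name)  # the head disappears
        self.collected += 1
        if self.collected >= self.target:
            self.game_over = True
        return True


def rotate(angle_degrees, speed_degrees_per_second, delta_seconds):
    """Advance a spinning head's angle by one frame, wrapping at 360 degrees."""
    return (angle_degrees + speed_degrees_per_second * delta_seconds) % 360.0
```

In Unity, the equivalent of collect would typically live in a trigger callback on the player and the equivalent of rotate in a per-frame update on each head, but that wiring is an assumption about how the uploaded scripts are organized.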

I've also uploaded the Animator Controller and the animations, so, if you create your own game, you should be able to drag the DemoScript, the Controller, and the animations onto your character and have them walking!

Step 5: Importing Reality Data Into Unity

I'm a novice when it comes to most of the technology described in this Instructable. More of an idea man, if you will! This is especially true for Unity. This game is my second proof-of-concept and it shows (you can see my first proof-of-concept game here). Importing 3D models into Unity is simple as heck, though. It's recommended that you create folders for individual assets and store them in the project's "Assets" folder. So, I created separate folders for "People" and "3D models" (the rooms of my apartment). Then, all you've got to do is drag and drop!

I moved all of the rooms of my townhouse around until they lined up with our real-world setup. As you can tell, the scans are pretty bad, but the end result is something surreal that reflects our subjective experiences of reality. This is what the world looks like to me! All droopy, weird, and a little bit scary, given the lack of definite ontological certainty provided to us by the Universe.

A lot of luck went into this game. For instance, when importing the models, I found that my character would walk on top of the bits of my apartment, so having him walk around the rooms was instantaneous!

Step 6: Adding a Skybox With Google StreetView

As my game is all about accurately reflecting reality through high-resolution scans and realistic game play (jk!), I wanted to import my actual neighborhood into the game. There are a few ways to do this, depending on your Unity experience and your wallet.

There is a toolkit for Unity called MapNav that allows users to use Google Earth data for their games, but it costs $55. This is for those creating intense GPS apps with a lot of capabilities. I also stumbled across this open source project that allows you to create an entire world with Google StreetView. The StreetView coordinates shift as your player moves throughout the game. I'm not good enough with Unity to implement this, so I went with the easiest and laziest option: a free Unity app called "StreetView to SkyBox". This simple app imports the coordinates of a specific StreetView location and turns it into a SkyBox (the six-sided cube that makes up the background of your world). So, my neighborhood isn't dynamic, but it sure is weird!
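To make the "six-sided cube" idea concrete: wherever the player looks, the background they see comes from whichever of the six faces lies along that direction. Here is a minimal sketch in plain Python, assuming +x is right, +y is up, and +z is forward. The function and the face labels are chosen for illustration and are not taken from the StreetView to SkyBox app.

```python
def skybox_face(x, y, z):
    """Return which of the six cube faces a view direction (x, y, z) points at."""
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax == 0 and ay == 0 and az == 0:
        raise ValueError("direction must be non-zero")
    # The axis with the largest magnitude decides the face; its sign picks the side.
    if ax >= ay and ax >= az:
        return "right" if x > 0 else "left"
    if ay >= az:
        return "up" if y > 0 else "down"
    return "front" if z > 0 else "back"
```

Because the faces are fixed images, the view never changes as the player walks, which is why the neighborhood in this game is static rather than dynamic.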

Step 7: Importing Friends & Family

Animating scans of people is really, really easy. Bringing animated characters into the game, however, was a little tricky to get the hang of.

Adobe recently acquired a software firm called Mixamo, which developed a free, online tool for adding animations to 3D models. Whether you design one yourself (I recently tried Adobe Fuse [previously Mixamo Fuse], which made creating a bipedaling, human-esque character a cinch) or use a 3D scan, you just upload your model to Mixamo, rig the model by dragging a series of points onto the character, and voila! You can then browse a fairly extensive library of animations and apply them to your character.

For all of my characters, I've attached various Mixamo animations (my wife dancing, my friends Tony and Melena rockin' out, and me jumping). Downloading these characters for Unity requires that you select a couple of specific options in Mixamo ("FBX for Unity" and "Skeleton Only").

Then, when you import your characters to Unity, there are a few things you need to do to get them to work properly in the game. Mixamo has a great tutorial about how to do this. I'm not good enough at coding to get these characters doing too much, but I was able to get them all dancing around! I checked the "Loop" box on my animations so that they loop endlessly.

One problem not covered in the Mixamo tutorial is the texture trouble that comes with importing these sorts of scans (they can look all grainy and over-exposed). Fortunately, I got some help from the Structure Sensor forum. When importing these FBX files, you'll need to change the shader on the texture maps to "Unlit -> Texture" and this should correct the issue! Thanks Structure users, Alex and Jim!

Step 8: Adding Sounds & Misc. Details

Adding sounds to a Unity game is pretty straightforward. You can add a background track to a level simply by dragging a song onto the Main Camera. I've chosen "Sussudio" by Phil Collins.

Each time my character picks up a floating head, a grunt from Prince plays. This is done through a bit of coding on the main character's DemoScript, which I mentioned earlier. Then, when the final head is picked up, I have Alan Watts performing an infectious laughing meditation. This is also done through a bit of coding in the main character's DemoScript. These two sounds can be replaced through a drag and drop interface.
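The sound logic is a two-way choice made at each pick-up. Here is a sketch in plain Python rather than the Unity C# in the DemoScript, with hypothetical clip names standing in for the two drag-and-drop audio slots. It assumes the last head triggers only the final clip, which is one reading of the description above.

```python
def clip_for_pickup(collected_so_far, total_heads, pickup_clip="grunt", final_clip="laugh"):
    """Return the clip to play after a pick-up, given the count including this one."""
    if not 1 <= collected_so_far <= total_heads:
        raise ValueError("collected_so_far must be between 1 and total_heads")
    # Assumption: the final head plays the ending clip instead of the usual one.
    return final_clip if collected_so_far == total_heads else pickup_clip
```

Keeping the clips as parameters mirrors the drag-and-drop slots: swapping a sound means changing a value, not the logic.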

Step 9: Publishing the Whole Works

After I created the flashiest menu screen the world of gaming has ever known, my game was ready to be played online. I set my Build Settings to "webplayer", clicked "Build and Run" and had myself my very own video game.

Making it so that others could play it was a bit more difficult. I followed this tutorial from Unity describing the use of Unity's Cloud Build Engine, which allows most users with Internet access to play Unity games for free, and SourceTree, which ensures that your Cloud Build stays up to date with the game on your desktop by syncing the two.

My game is playable online here. So far, it can be played on the most up-to-date version of Firefox, but doesn't seem to work in Chrome. If you can't get it to work, I've also embedded a video walkthrough.

Step 10: The Future of Mixed Reality Gaming

For my game, I've 3D printed the floating heads that my player collects throughout the level. As I said, the level itself is 3D printable, but not quite pretty enough to 3D print! I have yet to 3D print the player and the characters themselves. I used Sculpteo and i.materialise to 3D print the heads in full-color sandstone, but could have also used Shapeways, another service bureau, or a local 3D printer on 3D Hubs. For a past project, I've used Mark's Lil 3D Printing Hub on 3D Hubs and was super happy with his services.

Incidentally, my characters can already be viewed in VR, thanks to Sketchfab, which has made it possible to view 3D models with Google Cardboard and, I believe, Oculus Rift. I think they're also working on HTC Vive support, as they recently announced compatibility with Steam. Because Sketchfab has partnered with Facebook, these models can be shared through Facebook, too.

My very rough proof-of-concept, however, is only the beginning. A number of gaming companies have already embraced 3D printing as a means of creating custom merchandise. Startups like FabZat, Whispering Gibbon, and Toyze focus on making game assets customizable and 3D printable, but, when 3D scanning is introduced to consumers, the entire gaming ecosystem will change. Users will be able to 3D scan their faces and import them into games, where they will be able to modify them before playing. Then, players will freeze scenes to create exportable, 3D printable items. And, once the software is more fully realized, they'll even be able to create their own levels with scans from their everyday lives.

This is taken even further once we introduce virtual reality and augmented reality platforms, as well as haptic devices. Soon, users will map digital content over their physical worlds, seamlessly transporting content to and from their virtual environments with 3D printing and 3D scanning. Haptic devices will make digital items feel almost real, while improvements in software and computing will make them look almost real. Then, once 3D printing evolves to the point that we can print at the atomic level, it's possible that we can 3D print with the basics of matter itself. When I print a copy of myself, it really will be me, bones, skin and all. Heck, we might even 3D print a copy of our universe.

With enough time, the lines between physical and virtual will be indistinguishable, resulting in a pseudo world... a pseussudio world... Who knows? Maybe the world we live in once started as a proof-of-concept video game that evolved into a completely functioning simulacrum in which the players, in their search for meaning, create a proof-of-concept video game that evolved into a completely functioning simulacrum in which the players, in their search for meaning, create a proof-of-concept video game that evolved into a completely functioning simulacrum in which the players, in their search for meaning, create a proof-of-concept video game that evolved into a completely functioning simulacrum in which the players, in their search for meaning...

Participated in the 3D Printing Contest 2016